Włodzisław Duch

Computational Intelligence (CI) is a branch of science that tries to solve problems that are effectively non-algorithmic (such as the semantic retrieval problem).

Artificial Intelligence (AI) is a branch of CI that stresses the importance of knowledge, knowledge representation, and rule-based understanding.

Biological inspirations: neural networks, evolutionary programming, genetic algorithms.

Logic: fuzzy logic, rough logic, possibility theory

Mathematics: multivariate statistics, classification theory, clustering, optimization theory

Pattern recognition: computer vision, speech recognition

Engineering: robotics, control theory, biocybernetics

Computer science: theory of grammars, automata theory, machine learning

"Soft computing" = {neural networks, evolutionary programming, fuzzy logic}

Useful collections of links:

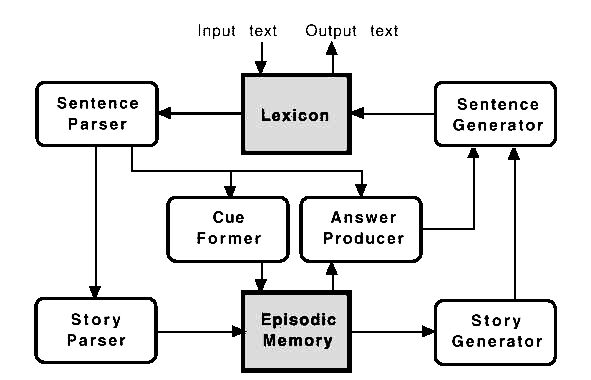

Understanding the meaning of sentences, learning from existing texts, dialogue with humans, machine translation.

Problems with meaning:

"In managing the DoD there are many unexpected communications problems. For instance, when the Marines are ordered to 'secure a building,' they form a landing party and assault it. On the other hand, the same instructions will lead the Army to occupy the building with a troop of infantry, and the Navy will characteristically respond by sending a yeoman to assure that the building lights are turned out. When the Air Force acts on these instructions, what results is a three year lease with option to purchase."

-- James Schlesinger (former Secretary of Defense, USA).

Syntax, grammar, parsing, and semantics. Meaning relies on background knowledge. What is knowledge?

Knowledge representation, linguistic (verbal) knowledge structures.

IF good food THEN salivate

Are we using rules? In the army all the time ...
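A rule such as IF good food THEN salivate can be applied mechanically by a forward-chaining interpreter: keep firing every rule whose conditions are all known facts until nothing new appears. The sketch below is a minimal illustration under the assumption that rules are stored as (conditions, conclusion) pairs; the second rule and the fact names are hypothetical additions, not from the lecture.

```python
def forward_chain(facts, rules):
    """Fire rules whose conditions all hold until no new facts are derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires when every condition is a known fact.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


RULES = [
    ({"good food"}, "salivate"),      # IF good food THEN salivate
    ({"salivate", "hungry"}, "eat"),  # hypothetical chained rule
]

print(sorted(forward_chain({"good food", "hungry"}, RULES)))
# ['eat', 'good food', 'hungry', 'salivate']
```

The loop re-scans all rules after any change, which is simple but quadratic; production systems use indexing schemes to avoid re-testing every rule.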

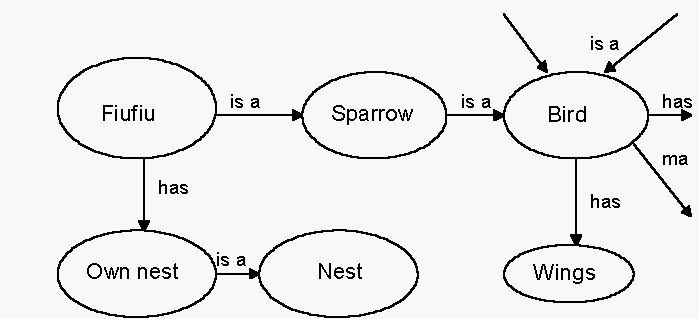

Each network node is a word; connections (arcs) between nodes may signify relations between words.
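One simple way to store such a network is a map from (node, relation) pairs to target nodes. This minimal sketch assumes a hypothetical `is_a` relation used for inheritance: a property missing at a node is looked up along its chain of `is_a` arcs. The canary/bird/animal vocabulary is an illustrative assumption.

```python
# Hypothetical semantic network: nodes are words, labelled arcs are relations.
NETWORK = {
    ("canary", "is_a"): "bird",
    ("bird", "is_a"): "animal",
    ("bird", "can"): "fly",
    ("canary", "color"): "yellow",
    ("animal", "has"): "skin",
}


def lookup(node, relation, network=NETWORK):
    """Follow is_a arcs upward until some node carries the requested relation."""
    while node is not None:
        if (node, relation) in network:
            return network[(node, relation)]
        node = network.get((node, "is_a"))  # None once the chain ends
    return None


print(lookup("canary", "can"))  # fly (inherited from bird)
print(lookup("canary", "has"))  # skin (inherited from animal)
```

Storing each property only at the most general node where it holds keeps the network small; the price is a walk up the hierarchy at query time.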

| Generic DOG Frame | DOG_NEXT_DOOR Frame |
|---|---|
| Self: an ANIMAL; a PET | Self: a DOG |
| Breed: ? | Breed: mutt |
| Owner: a PERSON (if-needed: find a PERSON with pet=myself) | Owner: Jimmy |
| Name: a PROPER NAME (DEFAULT=Rover) | Name: Fido |
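The two DOG frames combine three slot mechanisms: explicit values, default values (Name = Rover), and if-needed procedures (find the Owner on demand). The sketch below is one possible encoding, assuming the Self slot is modelled as a parent link and unfilled slots are inherited from it; the ANIMAL and PET parents are omitted, and the `PEOPLE` table and the REX and STRAY frames are hypothetical.

```python
# Hypothetical "world": person -> name of that person's pet.
PEOPLE = {"Alice": "Rex"}


class Frame:
    """A frame with slot values, defaults and if-needed procedures.
    The 'Self' slot is the parent link; unfilled slots are inherited."""

    def __init__(self, name, parent=None, values=None, defaults=None, if_needed=None):
        self.name = name
        self.parent = parent
        self.values = values or {}
        self.defaults = defaults or {}
        self.if_needed = if_needed or {}

    def chain(self):
        """This frame followed by its ancestors."""
        frame = self
        while frame is not None:
            yield frame
            frame = frame.parent

    def get(self, slot):
        # 1. An explicitly filled slot anywhere up the chain wins.
        for frame in self.chain():
            if slot in frame.values:
                return frame.values[slot]
        # 2. Otherwise run an if-needed procedure, then fall back to a default.
        for frame in self.chain():
            if slot in frame.if_needed:
                result = frame.if_needed[slot](self)
                if result is not None:
                    return result
            if slot in frame.defaults:
                return frame.defaults[slot]
        return None  # e.g. "Breed: ?" stays unknown


def find_owner(frame):
    """if-needed: find a PERSON whose pet is this dog."""
    name = frame.get("name")
    return next((p for p, pet in PEOPLE.items() if pet == name), None)


DOG = Frame("DOG", defaults={"name": "Rover"}, if_needed={"owner": find_owner})
DOG_NEXT_DOOR = Frame("DOG_NEXT_DOOR", parent=DOG,
                      values={"breed": "mutt", "owner": "Jimmy", "name": "Fido"})
REX = Frame("REX", parent=DOG, values={"name": "Rex"})  # hypothetical
STRAY = Frame("STRAY", parent=DOG)                       # hypothetical

print(DOG_NEXT_DOOR.get("owner"), REX.get("owner"), STRAY.get("name"))
# Jimmy Alice Rover
```

Explicit values are checked before procedures and defaults, so a specific frame such as DOG_NEXT_DOOR always overrides what the generic frame would compute or assume.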

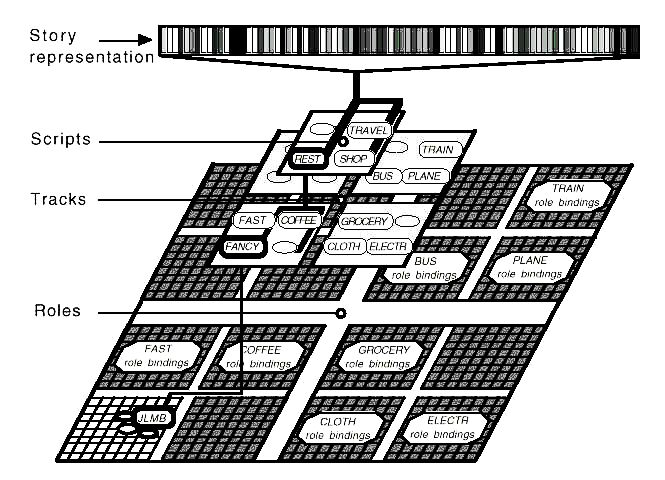

Scripts: stereotypic stories, e.g. about restaurants, accidents, business.
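A stereotypic story (a script) lets a reader fill in events a text never mentions: if someone entered a restaurant and paid, they presumably also ordered and ate. This minimal sketch assumes a script is just an ordered list of scenes; the scene names are hypothetical, and real scripts also carry roles, props, and entry conditions.

```python
# Hypothetical restaurant script: stereotypic scenes in their usual order.
RESTAURANT_SCRIPT = ["enter", "sit down", "order", "eat", "pay", "leave"]


def infer_unstated(story_events, script=RESTAURANT_SCRIPT):
    """Return scenes the story skipped between its first and last mentioned scene."""
    positions = [script.index(e) for e in story_events if e in script]
    if not positions:
        return []  # the story does not match this script at all
    first, last = min(positions), max(positions)
    return [s for s in script[first:last + 1] if s not in story_events]


print(infer_unstated(["enter", "pay"]))  # ['sit down', 'order', 'eat']
```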

There are many other knowledge representation schemes.

A good part of AI is knowledge engineering.

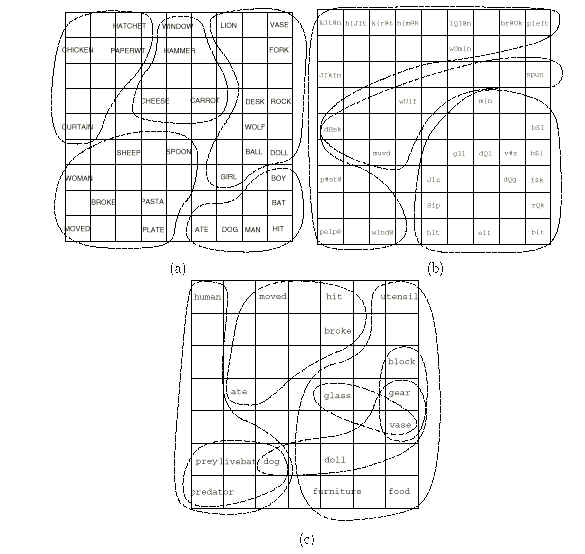

How to show similarity relations between words?

Psychologists estimate semantic distance from word associations or from reaction times.

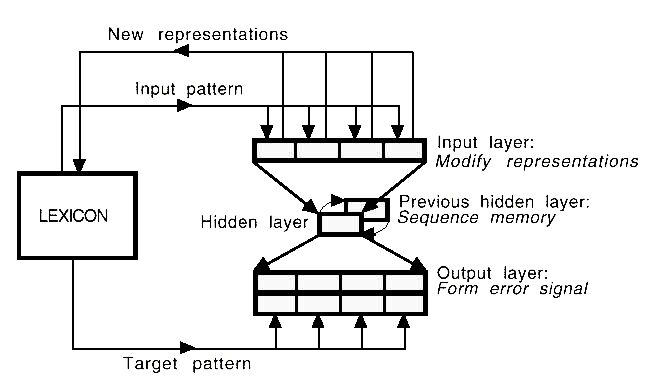

How do we do it with our brains? Neural networks.

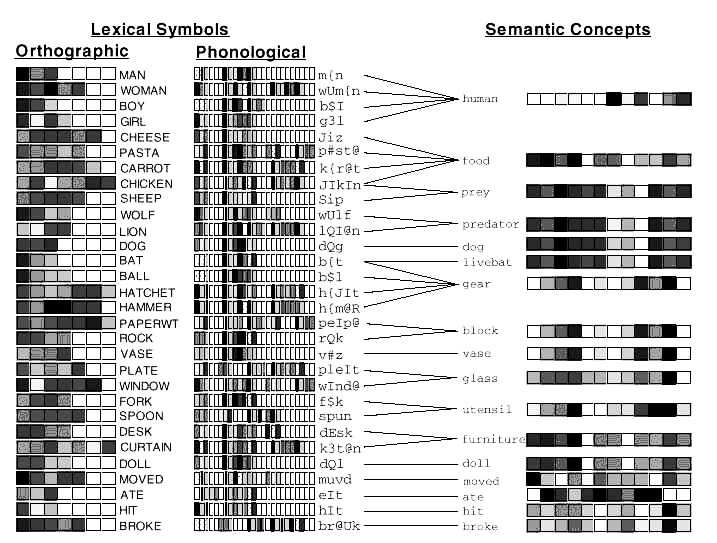

Vector descriptions may be used instead of neural activations; Latent Semantic Analysis indicates that perhaps about 300 dimensions are sufficient.

High similarity of symbols or concepts <=> closeness in the concept space.
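Closeness in a concept space needs a measure; cosine similarity (the angle between two vectors) is a common choice for LSA-style word vectors. The sketch below assumes tiny hypothetical 4-dimensional vectors purely for illustration; they are not taken from any real semantic space.

```python
import math

# Hypothetical 4-dimensional concept vectors; real spaces use ~300 dimensions.
CONCEPTS = {
    "dog": [0.9, 0.8, 0.1, 0.0],
    "cat": [0.8, 0.9, 0.2, 0.0],
    "car": [0.0, 0.1, 0.9, 0.8],
}


def cosine(u, v):
    """Cosine of the angle between u and v: 1 = same direction, 0 = orthogonal."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def nearest(word):
    """The other concept closest to `word` in the concept space."""
    others = [w for w in CONCEPTS if w != word]
    return max(others, key=lambda w: cosine(CONCEPTS[word], CONCEPTS[w]))


print(nearest("dog"))  # cat
```

Cosine ignores vector length, so a frequent word and a rare word with the same usage pattern still count as similar.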

"Platonic mind" model - a few pictures

Semantic maps: SOM (self-organizing map) of oils from Italy.
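A SOM (Kohonen's self-organizing map) places similar inputs on nearby map units: for each input, the best-matching unit and its grid neighbors move their weight vectors toward it, while the learning rate and neighborhood radius shrink over time. This is a minimal 1-D sketch, assuming a Gaussian neighborhood, linear decay schedules, and hypothetical 2-D toy data; the lecture's maps are 2-D and trained on real data.

```python
import math
import random


def best_matching_unit(weights, x):
    """Index of the unit whose weight vector is closest (squared Euclidean) to x."""
    return min(
        range(len(weights)),
        key=lambda i: sum((w - xi) ** 2 for w, xi in zip(weights[i], x)),
    )


def train_som(data, n_units, epochs=50, lr0=0.5, seed=0):
    """Train a 1-D self-organizing map and return its list of weight vectors."""
    rng = random.Random(seed)
    dim = len(data[0])
    # Random initial weights in [0, 1].
    weights = [[rng.random() for _ in range(dim)] for _ in range(n_units)]
    radius0 = n_units / 2
    total_steps = epochs * len(data)
    step = 0
    for _ in range(epochs):
        for x in rng.sample(data, len(data)):  # shuffled pass over the data
            frac = step / total_steps
            lr = lr0 * (1 - frac)                    # learning rate decays linearly
            radius = max(radius0 * (1 - frac), 0.5)  # neighborhood shrinks
            bmu = best_matching_unit(weights, x)
            for i, w in enumerate(weights):
                # Gaussian neighborhood over distance on the 1-D unit grid.
                h = math.exp(-((i - bmu) ** 2) / (2 * radius ** 2))
                for k in range(dim):
                    w[k] += lr * h * (x[k] - w[k])
            step += 1
    return weights


data = [[0.1, 0.1], [0.15, 0.1], [0.9, 0.9], [0.85, 0.95]]  # hypothetical
som = train_som(data, n_units=6)
print([best_matching_unit(som, x) for x in data])
```

Each update moves a weight vector part of the way toward the input (by a factor of at most `lr0`), so weights stay inside the range of the initial values and the data.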

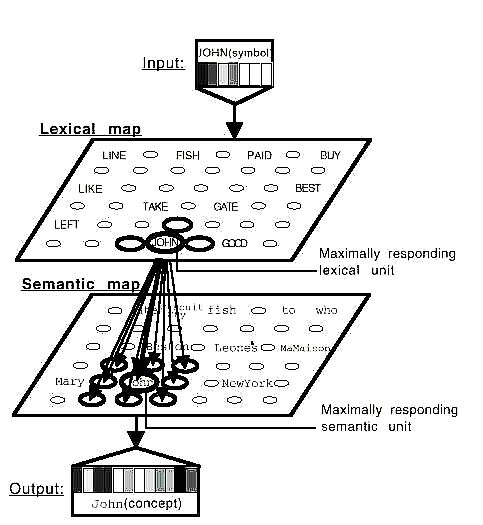

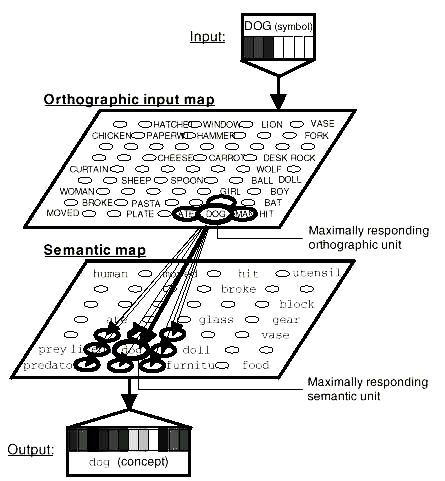

Lexicon propagation. The orthographic input symbol DOG is translated into the semantic concept dog in this example. The representations are vectors of gray-scale values between 0 and 1, stored in the weights of the feature map units. The size of the unit on the map indicates how strongly it responds. Only a few strongest associative connections of the orthographic input unit DOG (and only that unit) are shown.
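The propagation step in the caption can be sketched in two moves: find the orthographic unit that responds most strongly to the input, then follow only that unit's associative connections to the strongest semantic unit. This is a simplified illustration, assuming hypothetical labeled units, weight vectors, and connection strengths; real feature maps have many unlabeled units whose labels come from the words they respond to.

```python
# Hypothetical toy maps (unit label -> weight vector with values in [0, 1]).
ORTHO_MAP = {"DOG": [0.9, 0.1, 0.2], "CAT": [0.1, 0.9, 0.3]}
SEMANTIC_MAP = {"dog": [0.8, 0.7, 0.1], "cat": [0.7, 0.8, 0.1], "bone": [0.2, 0.1, 0.9]}
# Hypothetical associative connection strengths, orthographic -> semantic unit.
ASSOC = {
    "DOG": {"dog": 0.9, "bone": 0.3, "cat": 0.1},
    "CAT": {"cat": 0.8, "dog": 0.2},
}


def squared_distance(u, v):
    return sum((a - b) ** 2 for a, b in zip(u, v))


def translate(ortho_input):
    """Map an orthographic input vector to a semantic concept and its vector."""
    # 1. The orthographic unit with the closest weights responds most strongly.
    ortho_unit = min(ORTHO_MAP, key=lambda u: squared_distance(ORTHO_MAP[u], ortho_input))
    # 2. Follow only that unit's associative connections; take the strongest.
    links = ASSOC[ortho_unit]
    concept = max(links, key=links.get)
    return concept, SEMANTIC_MAP[concept]


print(translate([0.85, 0.15, 0.2]))  # ('dog', [0.8, 0.7, 0.1])
```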

- see Inxight https://www.inxight.com/

- see the Altavista Discovery project

Find a short logical summary of the database, or show its most important relationships.

Examples: the Iris and mushroom datasets.
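As a very small instance of a logical summary, Holte's 1R (OneR) method builds one IF attribute = value THEN class rule set per attribute and keeps the attribute whose rules make the fewest errors. This technique is swapped in here for brevity; the lecture does not say which rule-extraction method it uses, and the records below are hypothetical, not the real mushroom data.

```python
from collections import Counter, defaultdict

# Hypothetical toy records: (attribute values, class).
RECORDS = [
    ({"odor": "none", "color": "white"}, "edible"),
    ({"odor": "none", "color": "brown"}, "edible"),
    ({"odor": "foul", "color": "white"}, "poisonous"),
    ({"odor": "foul", "color": "brown"}, "poisonous"),
    ({"odor": "almond", "color": "white"}, "edible"),
]


def one_rule(records):
    """Return (attribute, errors, {value: class}) for the best single attribute."""
    best = None
    for attr in records[0][0]:
        counts = defaultdict(Counter)  # value -> class counts
        for features, label in records:
            counts[features[attr]][label] += 1
        # Each value predicts its majority class.
        rules = {v: c.most_common(1)[0][0] for v, c in counts.items()}
        errors = sum(sum(c.values()) - c[rules[v]] for v, c in counts.items())
        if best is None or errors < best[1]:
            best = (attr, errors, rules)
    return best


attr, errors, rules = one_rule(RECORDS)
for value, label in rules.items():
    print(f"IF {attr} = {value} THEN {label}")
```

On these toy records the odor rules classify every example correctly, while color alone misclassifies two.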